NinjaTech AI Unveils World’s Fastest Deep Research: Revolutionizing AI-Powered Information Analysis

NinjaTech AI and Cerebras Systems deliver deep research up to 5x faster than competing solutions, transforming how organizations analyze information.

LOS ALTOS, CA, UNITED STATES, August 15, 2025 /EINPresswire.com/ -- NinjaTech AI, a Silicon Valley-based agentic AI company, and Cerebras Systems today announced the launch of the world’s fastest Deep Research, designed to transform how organizations discover, analyze, and leverage information. The new capability researches and processes complex data up to 5x faster than competing solutions, setting a new benchmark for AI research capabilities.

A Powerful Partnership Driving Innovation at Unprecedented Speed

The strategic partnership combines NinjaTech AI's agentic research framework with Cerebras' wafer-scale inference. SuperNinja Fast Deep Research completes complex sequences of reasoning, source evaluation, and synthesis that once took 10+ minutes in just 1-2 minutes, without compromising the quality or depth of analysis.

“SuperNinja is an AI agent with its own virtual computer, able to perform powerful research, analysis, and coding for users autonomously. Through our partnership with Cerebras, we’ve dramatically increased the speed of complex thinking cycles like Deep Research, unlocking powerful iterative use and making SuperNinja an even more valuable partner for both business and technical users,” said Babak Pahlavan, Co-Founder and CEO, NinjaTech AI.

Benchmarks Validate Leadership: Speed Without Sacrificing Accuracy

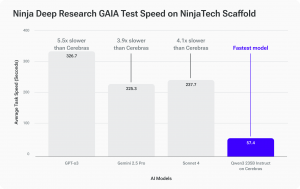

SuperNinja Fast Deep Research was rigorously tested on the GAIA benchmark, a widely used industry standard of 466 real-world questions that require multi-step reasoning and tool use. The results demonstrate the technical advantages of the new architecture:

- Achieved 58.9% accuracy, within four percentage points of top-tier models such as Anthropic's Claude Sonnet 4 (62.1%) and Google's Gemini 2.5 Pro (62.6%), and ahead of OpenAI's o3 (54.5%)

- Completed GAIA tasks in an average of just 57.4 seconds: 3.9× faster than Gemini 2.5 Pro, 4.1× faster than Claude Sonnet 4, and 5.5× faster than o3
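As a rough illustration, the stated speedups imply an average per-task time for each comparison model. The sketch below assumes each factor is a simple ratio of average GAIA completion times (competitor average divided by SuperNinja's 57.4 seconds); the release does not say exactly how the factors were computed, and the function name is hypothetical.

```python
# Average GAIA task time reported for SuperNinja Fast Deep Research (seconds).
SUPERNINJA_AVG_S = 57.4

# Speedup factors as stated in the release. Treating each as
# (competitor average time) / (SuperNinja average time) is an assumption.
SPEEDUPS = {
    "Gemini 2.5 Pro": 3.9,
    "Sonnet 4": 4.1,
    "o3": 5.5,
}


def implied_avg_seconds(base_s: float, speedup: float) -> float:
    """Back out a competitor's implied average task time from a speedup ratio."""
    return base_s * speedup


if __name__ == "__main__":
    for model, factor in SPEEDUPS.items():
        t = implied_avg_seconds(SUPERNINJA_AVG_S, factor)
        print(f"{model}: ~{t:.1f} s (~{t / 60:.1f} min) per task")
```

Under that assumption, the comparison models would average roughly 3.7 to 5.3 minutes per task, versus under one minute for SuperNinja.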

“Large language models are at their most magical when they deliver answers instantly. Cerebras’ wafer-scale engine runs the entire model in on-chip SRAM, eliminating GPU bottlenecks and enabling SuperNinja to complete deep research tasks dramatically faster at the same accuracy,” said Andrew Feldman, Founder and CEO, Cerebras Systems.

The Impact: Redefining Knowledge Work

SuperNinja Fast Deep Research is changing how knowledge workers and leaders interact with information and make decisions. By reducing complex research cycles from 10+ minutes to just 1-2 minutes, it transforms the flow and rhythm of knowledge-intensive work. The ability to iterate rapidly and accurately enables a new kind of cognitive partnership between humans and AI and removes the processing bottlenecks that have traditionally limited organizational agility. From financial analysts exploring 5x more scenarios to marketing teams analyzing audience research data in minutes rather than hours, organizations stand to benefit significantly.

How It Works

SuperNinja's Deep Research system uses a Plan & CodeACT scaffolding framework that turns the Qwen3-Instruct 235B model into a goal-driven researcher. The framework runs iterative validation, verification, and replanning loops and generates roughly twice as many reasoning tokens as standard inference.
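The release does not describe how Plan & CodeACT is implemented. The sketch below is a hypothetical, minimal illustration of the general pattern it names: plan a sequence of steps, act by executing a code action for each step, verify the result, and replan when a step fails. Every name here (`Step`, `ResearchState`, `run_research_loop`, the `demo_*` stubs) is invented for illustration, and the stubs stand in for calls to a model such as Qwen3.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Step:
    goal: str
    code: str  # the "code action" proposed for this step
    result: Optional[object] = None


@dataclass
class ResearchState:
    question: str
    plan: List[Step] = field(default_factory=list)
    notes: List[str] = field(default_factory=list)
    replans: int = 0


def execute(code: str) -> object:
    """Run one code action and return whatever it binds to `result`.

    Illustration only: a real agent would run this in an isolated sandbox,
    never via exec() in its own process.
    """
    namespace: dict = {}
    exec(code, namespace)
    return namespace.get("result")


def run_research_loop(
    question: str,
    plan_fn: Callable[[str], List[Step]],
    verify_fn: Callable[[Step], bool],
    replan_fn: Callable[[ResearchState, int], List[Step]],
    max_replans: int = 3,
) -> ResearchState:
    """Plan, act (execute code), verify, and replan until every step passes."""
    state = ResearchState(question, plan=plan_fn(question))
    i = 0
    while i < len(state.plan):
        step = state.plan[i]
        try:
            step.result = execute(step.code)
            ok = verify_fn(step)
        except Exception as exc:  # a failed action counts as a failed check
            state.notes.append(f"{step.goal}: {exc!r}")
            ok = False
        if ok:
            i += 1
            continue
        if state.replans >= max_replans:
            raise RuntimeError("stopped after max_replans attempts")
        state.replans += 1
        # Replace the failing step and everything after it with a revised plan.
        state.plan[i:] = replan_fn(state, i)
    return state


# Hypothetical stub "model" calls; a real system would prompt an LLM here.
def demo_plan(question: str) -> List[Step]:
    return [Step("average the figures", "result = sum(figures) / len(figures)")]


def demo_verify(step: Step) -> bool:
    return isinstance(step.result, float)


def demo_replan(state: ResearchState, failed_index: int) -> List[Step]:
    return [Step("average the figures",
                 "figures = [12, 15, 18]\nresult = sum(figures) / len(figures)")]


if __name__ == "__main__":
    final = run_research_loop("What is the average?", demo_plan, demo_verify, demo_replan)
    print(final.plan[-1].result, final.replans, final.notes)
```

In the demo, the first code action fails with a `NameError`, the loop records the error, replans once, and the revised step returns `15.0`. Each extra verify and replan pass means more generated tokens, which is why this style of scaffolding stands to gain from fast inference.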

Cerebras' wafer-scale inference technology runs the entire model in on-chip SRAM, eliminating inter-GPU bottlenecks and accelerating the full scaffolding loop by 4-6× compared to traditional systems.

Availability

NinjaTech AI’s SuperNinja Fast Deep Research is available today at https://super.myninja.ai/. For additional information, visit https://www.ninjatech.ai.

About NinjaTech AI

NinjaTech AI is a Silicon Valley-based company building next-generation autonomous AI agents designed to execute complex tasks from start to finish. The company's leadership team, which includes former senior leaders from Google, Meta, and AWS, brings more than 30 years of combined AI experience. NinjaTech AI is backed by Amazon's Alexa Fund, Stanford Research Institute (SRI), and Samsung Venture Fund.

About Cerebras Systems

Cerebras Systems is a team of pioneering computer architects, computer scientists, deep learning researchers, and engineers of all types. We have come together to accelerate generative AI by building from the ground up a new class of AI supercomputer. Our flagship product, the CS-3 system, is powered by the world’s largest and fastest commercially available AI processor, our Wafer-Scale Engine-3. CS-3s are quickly and easily clustered together to make the largest AI supercomputers in the world, and make placing models on the supercomputers dead simple by avoiding the complexity of distributed computing. Cerebras Inference delivers breakthrough inference speeds, empowering customers to create cutting-edge AI applications. Leading corporations, research institutions, and governments use Cerebras solutions for the development of pathbreaking proprietary models, and to train open-source models with millions of downloads. Cerebras solutions are available through the Cerebras Cloud and on-premises. For further information, visit cerebras.ai or follow us on LinkedIn, X and/or Threads.

Media Contact

NinjaTech AI

press@ninjatech.ai

Cerebras Systems

PR@zmcommunications.com

Armand Sanchez

NinjaTech AI

+1 408-345-5360


Legal Disclaimer:

EIN Presswire provides this news content "as is" without warranty of any kind. We do not accept any responsibility or liability for the accuracy, content, images, videos, licenses, completeness, legality, or reliability of the information contained in this article. If you have any complaints or copyright issues related to this article, kindly contact the author above.